Leverage AI & HI to Uncover Themes in Harmful Gaming Interactions


As a n00b in the insights industry, I remember my first gaming study, where I discovered that I could combine my curiosity about what makes humans tick with my passion for gaming. I also remember opening my first set of open ends and thinking very little of it, until I read through the data. Automated software could never pick up the nuances of what farming, grinding, and camping mean in a gaming context. Several weeks, 10,000 open ends, and 10 countries later, the project was complete. That was seven years ago, and a lot has changed since. Dozens of platforms and programs now use AI automation to categorize, classify, and analyze open text, saving insight professionals hours of work. However, I have always been skeptical of AI's ability to pick up on slang in context, especially within certain communities.

Every industry (financial services, technology, hospitality) has its own jargon, and as every gamer, friend of a gamer, or industry professional knows, gaming is no different. I use the word 'gamer' selectively, although 'player' is often the industry's preferred term for anyone who plays games. When most people think of a 'gamer,' they picture teenage boys entrenched in an online multiplayer shooter like CS:GO or Call of Duty, yelling at their buddies through oversized RGB headsets. While that description still fits some players, the picture has changed over the years. Three-quarters of all US households have someone who identifies as a gamer under their roof, and the demographics of people who play games are becoming increasingly diverse. However, even in virtual worlds, pockets of the community form strange in-group and out-group dynamics. Online multiplayer games are notorious for hopelessly toxic environments where harassment, griefing, and trolling thrive.

Game companies are aware of the problem and have both moral and financial reasons to make player interactions more respectful and welcoming to all. Only within the past few years have we seen moderation tools designed to combat the issue. Mute functions, intelligent chat and audio AI, endorsement systems, and ping systems are some of the savvy mechanics companies use against toxicity. Many companies are now part of the Fair Play Alliance, a gaming industry coalition, and share research and techniques to encourage healthy communities. Insight professionals and academics alike have documented toxicity's detrimental effects on players, especially new players and players from underrepresented groups. Most of these studies have relied on survey data and qualitative interviews. At CMB, we used our proprietary themeAI tool to explore toxicity at scale. What themes emerge in conversations about toxicity? Do open conversations confirm the findings of past self-reported studies?

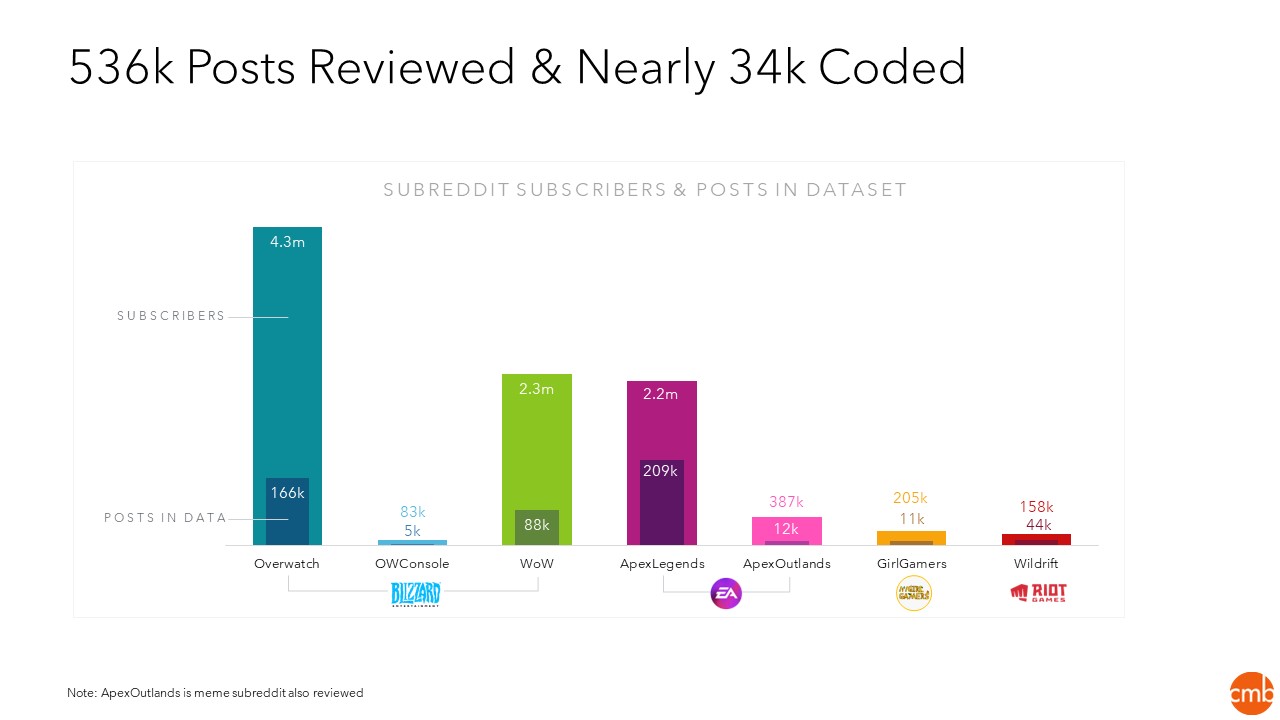

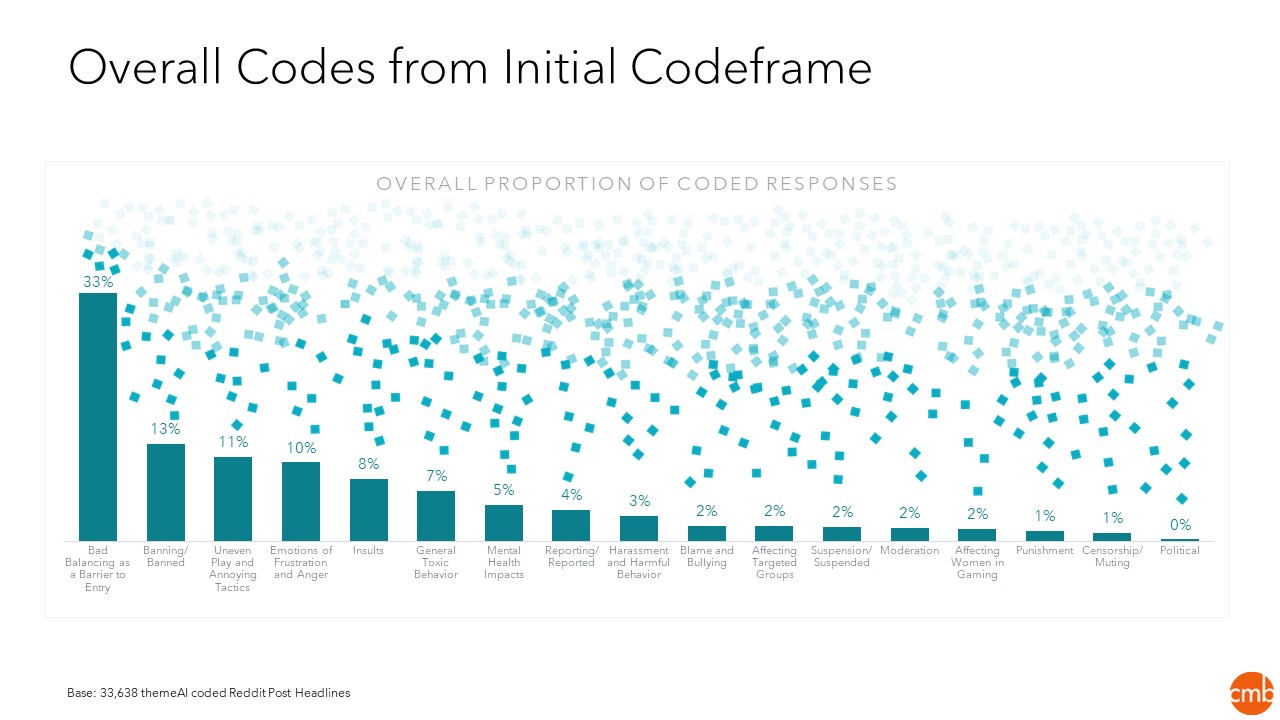

To help answer these questions, we analyzed ~526,000 toxicity-related posts from the subreddits of popular multiplayer games and coded a sample of 34,000 of them. Using themeAI on post headlines and comments, we developed a framework to deepen our understanding of toxicity. While themeAI processed far more data than any human could code, as shown in our graph, the human factor was essential for capturing the nuances of gamers' very colorful language.

Findings from the themeAI analysis confirmed that toxicity is linked to mental health, to feelings of frustration and anger, and to a barrage of offensive insults. Themes centered on balanced gameplay, reporting, and moderation tools are especially interesting, since the latest tools for taming toxicity are relatively new.

CMB applied a general lens across different games. However, it's essential to tailor the framework to individual games and communities, as there is no "one size fits all" approach. Each game and community is unique and faces its own challenges and language. themeAI proves as adaptive as humans and as fast as computers: it can apply a custom framework to any unstructured, open-ended text, such as gaming forums, and track it over time.

The next step is to apply sentiment analysis across negative themes to uncover what really brings out the worst in players, and then to take a deep dive into how players manage their communities and how and when they use current reporting and moderation tools. By building their knowledge of toxicity in gaming and bridging the gaps in it, professionals can iterate on and build tools that create an inclusive environment. Many researchers call toxicity a 'wicked' problem, and the stakes are only rising as the metaverse opens new ways for us to dehumanize one another.

Do you have a wicked problem you want CMB to help you think through? Do you have a passionate online community you want to learn from? Contact me, and let CMB uncover the themes that will help you understand your online community better.